Thanksgiving is a joyous invitation to shower the world with love and gratitude. Forever on Thanksgiving the heart will find the pathway home. The more you practice the art of thankfulness, the more you have to be thankful for.

Wishing you a very happy and blessed Thanksgiving!

A threat is taking over the world today. SARS-CoV-2 is a virus that has spread across the planet and changed how societies behave. People are looking for ways to strengthen the physical barrier provided by homemade masks. Viruses (the word comes from the Latin for “toxin” or “poison”) are infectious agents, most of them nanometric in scale, with diameters of roughly 20-300 nm. An abiotic material capable of inhibiting the spread of viruses is therefore indispensable. Understanding how viruses adhere to textile surfaces is very important for choosing the best fabric, that is, the one to which the virus adheres least. Virus adhesion can be minimized by modifying the surface characteristics of the textile. In particular, adding nanostructures with antimicrobial activity is an essential factor in obtaining efficient textiles for homemade masks.

Read more about this article: https://lupinepublishers.com/material-science-journal/fulltext/homemade-mask-how-to-protect-ourselves-from-microorganisms.ID.000159.php

Read more Lupine Publishers Google Scholar Articles: https://scholar.google.com/citations?view_op=view_citation&hl=en&user=BVzKHbAAAAAJ&citation_for_view=BVzKHbAAAAAJ:IWHjjKOFINEC

Interest in composite materials is growing, and sectors ranging from aerospace engineering to medical applications increasingly depend on them in their operations. However, even the most sophisticated composite materials still rely on other integrated sub-sets of components. Moreover, certain limitations and flaws exist within the components of composite materials that can allow an error to grow beyond control and affect the main component. Such limitations and flaws can also compromise engineering targets for the resilience built into daily operations, as this article points out.

Read more about this article: https://lupinepublishers.com/material-science-journal/fulltext/advantages-and-disadvantages-of-using-composite-laminates-in-the-industries.ID.000158.php

Read more Lupine Publishers Google Scholar Articles: https://scholar.google.com/citations?view_op=view_citation&hl=en&user=BVzKHbAAAAAJ&citation_for_view=BVzKHbAAAAAJ:ZeXyd9-uunAC

Lupine Publishers| Modern Approaches on Material Science

Companies such as Intel, a pioneer in chip design, are pushing computing from its present classical generation to the next generation: quantum computing. Alongside Intel, companies such as IBM, Microsoft, and Google are also active in this domain. The race is on to build the world’s first meaningful quantum computer, one that can deliver the technology’s long-promised ability to help scientists do things like develop miraculous new materials, encrypt data with near-perfect security, and accurately predict how Earth’s climate will change. Such a machine is likely more than a decade away, but IBM, Microsoft, Google, Intel, and other tech heavyweights breathlessly tout each tiny, incremental step along the way. Most of these milestones involve packing ever more quantum bits, or qubits (the basic unit of information in a quantum computer), onto a processor chip. But the path to quantum computing involves far more than wrangling subatomic particles. Such computing capabilities open a new way of dealing with the sheer volume of structured and unstructured data known as Big Data. They are an excellent augmentation to Artificial Intelligence (AI) and could allow it to advance to its next generation, Super Artificial Intelligence (SAI), in the near term.

Keywords: Quantum Computing and Computer, Classical Computing and Computer, Artificial Intelligence, Machine Learning, Deep Learning, Fuzzy Logic, Resilience System, Forecasting and Related Paradigm, Big Data, Commercial and Urban Demand for Electricity

Quantum Computing (QC) is built around Quantum Mechanical (QM) phenomena such as superposition and entanglement, which it uses to perform computation. Computers that perform quantum computation are known as quantum computers [1-5]. Superposition is a fundamental principle of quantum mechanics. The principle of Quantum Superposition (QS) states that, much like waves in Classical Mechanics (CM) or Classical Physics (CP), any two or more quantum states can be added together (“superposed”), and the result will be another valid quantum state. Conversely, every quantum state can be represented as a sum of two or more other distinct states. Mathematically, superposition is a property of the solutions of both the time-dependent and the time-independent Schrödinger equations: because the Schrödinger equation is linear, any linear combination of solutions is also a solution. A physically observable manifestation of the wave nature of quantum systems is the pattern of interference peaks produced by an electron beam in a double-slit experiment, as illustrated in (Figure 1). The pattern is very similar to the one obtained by the diffraction of classical waves [6]. Quantum computers are believed to be able to solve some computational problems significantly faster than classical computers. One example is integer factorization, which underlies RSA encryption [7]. The study of quantum computing is a subfield of quantum information science.
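The linearity argument above can be shown with a minimal sketch in Python. This is an illustration and not code from the article. Single-qubit states are represented as lists of complex amplitudes, and the snippet checks two things. First, a normalized sum of the basis states |0⟩ and |1⟩ is again a unit-norm state. Second, a linear (here unitary) evolution applied to a superposition gives the same result as superposing the evolved states. The phase-rotation operator and the angle `theta` are arbitrary choices made for illustration.

```python
import cmath
import math

def norm(state):
    """Euclidean norm of a state given as a list of complex amplitudes."""
    return math.sqrt(sum(abs(a) ** 2 for a in state))

def superpose(c1, psi1, c2, psi2):
    """Return the normalized linear combination c1*psi1 + c2*psi2."""
    combo = [c1 * a + c2 * b for a, b in zip(psi1, psi2)]
    n = norm(combo)
    return [a / n for a in combo]

def evolve(u, psi):
    """Apply a linear operator u (a list of rows) to the state psi."""
    return [sum(u[i][j] * psi[j] for j in range(len(psi))) for i in range(len(u))]

ket0 = [1 + 0j, 0j]
ket1 = [0j, 1 + 0j]

# The equal-weight superposition (|0> + |1>)/sqrt(2) is itself a valid state.
plus = superpose(1, ket0, 1, ket1)

# An illustrative unitary evolution: a relative phase rotation by theta (assumed).
theta = 0.7
u = [[1, 0], [0, cmath.exp(1j * theta)]]

# Linearity: U(a*psi1 + b*psi2) == a*U(psi1) + b*U(psi2), with a = b = 1/sqrt(2).
a = b = 1 / math.sqrt(2)
lhs = evolve(u, plus)
rhs = [a * x + b * y for x, y in zip(evolve(u, ket0), evolve(u, ket1))]

print("norm of superposition:", round(norm(plus), 12))
print("linearity holds:", all(abs(p - q) < 1e-12 for p, q in zip(lhs, rhs)))
```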

Historically, Classical Computer (CC) technology, as we have known it over the past few decades, has gone through a sequence of changes from one type of physical realization to another. It has evolved from the mainframes of earlier generations to today's microcomputers. These days pretty much everyone owns a small computer in the form of a laptop, and such machines are found in nearly every household. Their Central Processing Units (CPUs) are based on transistors built around semiconductor junctions such as the Positive-Negative-Positive (PNP) junction. From gears to relays to valves to transistors to integrated circuits, each step has been driven by our need for automation and, consequently, for ever more capable computers. Today’s advanced sub-micron lithography, combined with process techniques such as Physical Vapor Deposition (PVD), Chemical Vapor Deposition (CVD), and Chemical Mechanical Polishing (CMP), can create chips with features only a fraction of a micron wide. Chip fabricators keep pushing toward even smaller parts. They will inevitably reach a point where logic gates are so small that they are made of only a handful of atoms, as depicted in (Figure 2).

It is worth mentioning that shrinking chip features far below the sub-micron scale is limited by the wavelength of the light used in lithography.

On the atomic scale, matter obeys the rules of Quantum Mechanics (QM). These are quite different from the rules of Classical Mechanics (CM) or classical physics that determine the properties of conventional logic gates. Thus, if computers are to become smaller in the future, new quantum technology must replace or supplement the traditional way of computing we have now. The point, however, is that quantum technology can offer much more than cramming more and more bits onto a silicon CPU chip and multiplying the clock speed of traditional microprocessors. It can support an entirely new kind of computation, with qualitatively new algorithms based on quantum principles! In a nutshell, Quantum Computing deals with qubits, while Classical Computing deals with bits of information. We therefore need to understand what qubits are and how they are defined, which we present further below. Progress toward tomorrow’s computers is illustrated by the result reported as “Quantum Bits Compressed for the First Time”: physicists have shown how to encode three quantum bits, the kind of data that might be used in this new generation of computers, using just two photons. Of course, a quantum computer is more than just its processor. These next-generation systems will also need new algorithms, software, interconnects, and several other yet-to-be-invented technologies. These must be specifically designed to take advantage of the system’s tremendous processing power and to allow the computer’s results to be shared or stored.

Intel introduced a 49-qubit processor code-named “Tangle Lake.” A few years ago, the company created a virtual testing environment for quantum-computing software. It leverages the powerful “Stampede” supercomputer at The University of Texas at Austin to simulate up to a 42-qubit processor. To understand how to write software for quantum computers, however, developers will need to be able to simulate hundreds or even thousands of qubits. Note that Stampede was one of the most powerful and significant supercomputers in the U.S. for open science research. Able to perform nearly ten quadrillion operations per second, Stampede offered opportunities for computational science and technology. These ranged from highly parallel algorithms, high-throughput computing, and scalable visualization to next-generation programming languages, as illustrated in (Figure 3) [8]. This Dell PowerEdge cluster, equipped with Intel Xeon Phi coprocessors, pushed the envelope of computational capabilities, enabling breakthroughs never before imagined. Stampede was funded by the National Science Foundation (NSF) through award ACI-1134872.
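A back-of-the-envelope estimate shows why simulating even tens of qubits calls for a supercomputer. It assumes a naive full state-vector simulator that stores all 2^n amplitudes as double-precision complex numbers (16 bytes each). The article does not say how the Stampede-based environment represents quantum states, and practical simulators may use other techniques.

```python
def state_vector_bytes(n_qubits, bytes_per_amplitude=16):
    """Bytes needed to store all 2**n_qubits amplitudes of an n-qubit state.

    Assumes one double-precision complex number (16 bytes) per amplitude."""
    return (2 ** n_qubits) * bytes_per_amplitude

TIB = 2 ** 40  # bytes in one tebibyte

for n in (30, 42, 49):
    print(f"{n} qubits: {state_vector_bytes(n) / TIB:,.3f} TiB")
```

Under this assumption, 42 qubits need 64 TiB, and each extra qubit doubles the requirement. A 49-qubit state would need 8,192 TiB, which helps explain why simulating hundreds or thousands of qubits this way is out of reach.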

Stampede was upgraded in 2016 with additional compute nodes built around Knights Landing (KNL), the second generation of the Intel® Xeon Phi™ many-core x86 architecture. In the new system, the Xeon Phi chips function as the primary processors. The upgrade ranked #116 on the June 2016 Top 500 list and was the only KNL system on the list.

A qubit can represent a 0 and a 1 at the same time, a uniquely quantum phenomenon known in physics as superposition. This lets qubits conduct vast numbers of calculations at once, massively increasing computing speed and capacity. But there are different types of qubits, and not all are created equal. In a programmable silicon quantum chip, for example, whether a bit is a 1 or a 0 depends on the direction in which its electron is spinning. Yet all qubits are notoriously fragile, and some require temperatures of about 20 millikelvin, 250 times colder than deep space, to remain stable. From a physical point of view, a bit is a physical system that can be prepared in one of two different states representing two logical values: No or Yes, False or True, or simply 0 or 1. Quantum bits, called qubits, are implemented using quantum mechanical two-state systems, as stated above. These are not confined to their two basic states but can also exist in superposition, meaning that the qubit is in both state 0 and state 1, as illustrated in (Figure 4). Any classical register composed of three bits can store, at a given moment, only one out of eight different numbers, as illustrated in (Figure 5). A quantum register composed of three qubits can store, at a given moment, all eight numbers in a quantum superposition, again as illustrated in (Figure 5). Once the register is prepared in a superposition of different numbers, one can perform operations on all of them, as demonstrated in (Figure 6). Thus, quantum computers can perform many different calculations in parallel. In other words, a system with N qubits can perform 2^N calculations at once!
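The register picture above can be made concrete with a small state-vector sketch in Python. It assumes the common convention that qubit 0 is the most significant bit. Applying a Hadamard gate to each of three qubits, starting from |000⟩, gives equal amplitudes on all eight numbers. A single Z gate on the last qubit then flips the sign of every odd number's amplitude in one step. The helper name `apply_1q` is hypothetical.

```python
import math

N = 3  # number of qubits in the register
s = 1 / math.sqrt(2)
H = [[s, s], [s, -s]]      # Hadamard gate
Z = [[1, 0], [0, -1]]      # Pauli-Z (phase-flip) gate

def apply_1q(state, gate, target, n):
    """Apply a 2x2 gate to qubit `target` (0 = most significant bit) of an n-qubit state."""
    shift = n - 1 - target
    new = [0j] * len(state)
    for i, amp in enumerate(state):
        in_bit = (i >> shift) & 1
        for out_bit in (0, 1):
            j = (i & ~(1 << shift)) | (out_bit << shift)
            new[j] += gate[out_bit][in_bit] * amp
    return new

# A classical 3-bit register holds exactly one number at a time, e.g. 5 = 0b101.
classical_register = 0b101
print("classical register:", format(classical_register, "03b"))

# A 3-qubit register holds 2**N amplitudes; start in |000> ...
state = [0j] * 2 ** N
state[0] = 1 + 0j
# ... and apply a Hadamard to every qubit: all 8 numbers now have equal amplitude.
for q in range(N):
    state = apply_1q(state, H, q, N)

# One gate now acts on all 8 amplitudes: Z on the last qubit negates every odd number.
state = apply_1q(state, Z, N - 1, N)

for x, amp in enumerate(state):
    print(format(x, "03b"), round(amp.real, 4))
```

Note that measuring this register returns only one of the eight numbers. Turning this parallelism into a real speed-up therefore requires algorithms that exploit interference, such as Shor's factoring algorithm.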

This has an impact on the execution time and memory required for a computation and determines the efficiency of algorithms. In summary, a memory consisting of N bits of information has 2^N possible states. A vector representing all memory states thus has 2^N entries, one for each state. This vector is viewed as a probability vector: each entry gives the probability of finding the memory in the corresponding state [9]. In the classical view, one entry has a value of 1 (i.e., a 100% probability of being in that state), and all other entries are zero. In quantum mechanics, probability vectors are generalized to density operators [10]. This is the technically rigorous mathematical foundation for quantum logic gates [11]. The intermediate quantum state vector formalism is usually introduced first, however, because it is conceptually simpler. One question that arises about qubits is “Why are qubits so fragile?”, and here is what we can say. Think of qubits as spinning coins: eventually they stop spinning and collapse into a particular state, whether heads or tails. The goal of quantum computing is to keep them spinning in a superposition of multiple states for a long time. Furthermore, regarding quantum operations, the prevailing model of Quantum Computation (QC) describes the computation in terms of a network of Quantum Logic Gates [12].
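The memory-state description in the previous paragraph can be made concrete by comparing a classical probability vector with its quantum generalization. The sketch below is an illustration, not the article's own formalism. A 2-bit memory has 2^2 = 4 states, and the classical vector has a single entry equal to 1. The density operator ρ = |ψ⟩⟨ψ| of the assumed example state (|00⟩ + |11⟩)/√2 has a diagonal that acts as a probability vector. It also has off-diagonal "coherences" that have no classical counterpart.

```python
import math

N = 2              # bits of memory
DIM = 2 ** N       # number of distinct memory states

# Classical view: a probability vector with a single 1 (memory is certainly "10").
classical = [0.0] * DIM
classical[0b10] = 1.0

def density_operator(psi):
    """Return rho = |psi><psi| for a state vector psi (list of complex amplitudes)."""
    return [[a * b.conjugate() for b in psi] for a in psi]

# Quantum view: the (assumed, illustrative) state (|00> + |11>)/sqrt(2).
s = 1 / math.sqrt(2)
psi = [s + 0j, 0j, 0j, s + 0j]
rho = density_operator(psi)

# The diagonal of rho plays the role of the classical probability vector ...
probabilities = [rho[i][i].real for i in range(DIM)]
# ... while off-diagonal entries (coherences) have no classical counterpart.
coherence = rho[0][3]

print("classical:", classical)
print("quantum diagonal:", [round(p, 3) for p in probabilities])
print("trace:", round(sum(probabilities), 12), "coherence:", round(abs(coherence), 3))
```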

Bear in mind that in quantum computing, and specifically in the quantum circuit model of computation, a quantum logic gate (or simply quantum gate) is a basic quantum circuit operating on a small number of qubits. Quantum gates are the building blocks of quantum circuits, just as classical logic gates are for conventional digital circuits [13]. Returning to the spinning coins: imagine I have a coin spinning on a table, and someone is shaking that table. That might cause the coin to fall over faster. Noise, temperature changes, electrical fluctuations, and vibration can all disturb a qubit’s operation and cause it to lose its data. One way to stabilize certain types of qubits is to keep them very cold. Our qubits operate in a dilution refrigerator about the size of a 55-gallon drum, which uses a particular isotope of helium to cool them to a fraction of a degree above absolute zero (roughly –273 degrees Celsius) [7]. There are probably no fewer than six or seven different types of qubits, and three or four of them are likely being actively considered for use in quantum computing. The differences lie in how you manipulate the qubits and how you get them to talk to one another. You need two qubits to talk to one another to do large “entangled” calculations, and different qubit types have different ways of entangling. Other qubit types are used in the quantum computers being built by Google, IBM, and others. Another approach uses the oscillating charges of trapped ions, held in place in a vacuum chamber by laser beams, to function as qubits. Intel is not developing trapped-ion systems because they require a deep knowledge of lasers and optics, which is not necessarily suited to our strengths.
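The gate-based picture can be sketched with plain matrix arithmetic: gates are unitary matrices, and a circuit is their composition. The example below assumes that qubit 0 is the most significant bit. It applies a Hadamard gate to the first qubit and then a CNOT to build the entangled Bell state (|00⟩ + |11⟩)/√2. This is the kind of two-qubit entangling operation mentioned above. The helper names `kron` and `matvec` are our own.

```python
import math

s = 1 / math.sqrt(2)
H = [[s, s], [s, -s]]        # Hadamard gate
I2 = [[1, 0], [0, 1]]        # identity on one qubit
# CNOT with qubit 0 (most significant) as control and qubit 1 as target.
CNOT = [[1, 0, 0, 0],
        [0, 1, 0, 0],
        [0, 0, 0, 1],
        [0, 0, 1, 0]]

def kron(a, b):
    """Kronecker (tensor) product of two matrices given as nested lists."""
    return [[a[i][j] * b[k][l] for j in range(len(a[0])) for l in range(len(b[0]))]
            for i in range(len(a)) for k in range(len(b))]

def matvec(m, v):
    """Multiply matrix m by vector v."""
    return [sum(m[i][j] * v[j] for j in range(len(v))) for i in range(len(m))]

state = [1, 0, 0, 0]                  # |00>
state = matvec(kron(H, I2), state)    # H on qubit 0: (|00> + |10>)/sqrt(2)
state = matvec(CNOT, state)           # CNOT: (|00> + |11>)/sqrt(2), a Bell state
print([round(a, 4) for a in state])   # [0.7071, 0.0, 0.0, 0.7071]
```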

For an algorithm to be efficient, the time it takes to execute must grow no faster than a polynomial function of the size of the input. For example, let us take the input size to be the total number of bits needed to specify the input to the problem, as demonstrated in (Figure 7). The number of bits required to encode the number we want to factorize is an example of this. Suppose the best algorithm we know for a particular problem has an execution time, viewed as a function of the input size, that is bounded by a polynomial. Then we say that the problem belongs to class P, as shown in (Figure 7). Problems outside class P are known as hard problems. Thus we say, for example, that multiplication is in P, whereas factorization is not known to be in P. “Hard” in this case does not mean “impossible to solve” or “noncomputable”. It means that the physical resources needed to factor a large number scale up so quickly that, for all practical purposes, the problem can be regarded as intractable. However, some quantum algorithms can turn hard mathematical problems into easy ones, factoring being the most striking example known so far. This matters for cryptography, where it could be used to decipher encrypted streams or communications. A major application can be seen in Rivest, Shamir, and Adleman (RSA) data security [8].
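A back-of-the-envelope comparison illustrates the difference between polynomial and exponential growth in the input size n, the number of bits. The sketch uses schoolbook multiplication, which takes about n^2 bit operations. It compares this with trial division, the simplest factoring method, which tries up to about 2^(n/2) divisors. Trial division is not the best known classical method. Even the best known classical algorithm, the general number field sieve, is super-polynomial, which is why factoring is not known to be in P.

```python
import math

def multiplication_steps(n_bits):
    """Approximate bit operations for schoolbook multiplication of two n-bit numbers: n**2."""
    return n_bits ** 2

def trial_division_steps(n_bits):
    """Worst-case number of candidate divisors for trial division of an n-bit number.

    Trial division tests divisors up to sqrt(N) < 2**(n/2), so the count is about
    2**(n/2), which is exponential in the input size n."""
    return math.isqrt(2 ** n_bits)

for n in (64, 256, 1024, 2048):
    mult = multiplication_steps(n)
    fact = trial_division_steps(n)
    print(f"{n:>4} bits: multiplication ~{mult:,} steps, "
          f"trial division ~10^{len(str(fact)) - 1} steps")
```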

The difficulty of factorization underpins the security of what are currently the most trusted methods of public-key encryption. This applies in particular to the RSA system, which is often used to protect electronic bank accounts, as depicted in (Figure 8). Once a quantum factorization engine (a special-purpose quantum computer for factoring large numbers) is built, all such cryptographic systems will become insecure. They will be left wide open to malware that can pass through the cybersecurity wall of the system under attack. The potential use of quantum factoring for code-breaking has been the most visible reason for building a Quantum Computer (QC); however, it is not the only application of QC. Artificial Intelligence (AI) has progressed over the past decade, and there is a push toward Super Artificial Intelligence (SAI) able to handle the sheer volume of Big Data (BD). As a result, today's market increasingly feels the need for quantum computing rather than classical computing alone. A supervised AI or SAI with integrated Machine Learning (ML) and Deep Learning (DL) allows us to process all of the historical data and compare it with incoming data. From this comparison we can extract the right information for proper decision-making in day-to-day operations and, most importantly, forecast the future as a paradigm model [14-17].
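The link between factoring and RSA can be shown with the well-known textbook toy key (p = 61, q = 53, e = 17). Real keys use primes hundreds of digits long. Whoever can factor the public modulus n can recompute the private exponent. A large quantum computer running Shor's algorithm would make exactly this feasible for real key sizes. The sketch is deliberately insecure and needs Python 3.8+ for the modular inverse `pow(e, -1, phi)`.

```python
import math

def make_toy_rsa(p, q, e):
    """Build a toy RSA key pair from two small primes (insecure, for illustration)."""
    n = p * q
    phi = (p - 1) * (q - 1)
    assert math.gcd(e, phi) == 1
    d = pow(e, -1, phi)              # modular inverse of e mod phi (Python 3.8+)
    return (n, e), (n, d)

def factor_by_trial_division(n):
    """Return (p, q) with p * q == n, where p is the smallest prime factor."""
    for p in range(2, math.isqrt(n) + 1):
        if n % p == 0:
            return p, n // p
    raise ValueError("n is prime")

public, private = make_toy_rsa(61, 53, 17)
n, e = public
message = 65
ciphertext = pow(message, e, n)
assert pow(ciphertext, private[1], n) == message   # legitimate decryption works

# An attacker who can factor n recovers the private exponent directly.
p, q = factor_by_trial_division(n)
d_recovered = pow(e, -1, (p - 1) * (q - 1))
print(p, q, d_recovered == private[1])   # 53 61 True
```

Trial division finishes instantly here only because n = 3233 is a 12-bit number. For a 2048-bit modulus the same loop is hopeless, and that classical hardness is what RSA relies on.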

The impact of quantum computing on developing better artificial intelligence, and its next generation, super artificial intelligence, is a common thread in ongoing efforts by scientists and engineers. Typically, the first quantum algorithms proposed are for security (such as cryptography) or for chemistry and materials modeling. These are problems that are essentially intractable with conventional computers. That said, a host of papers, start-up companies, and university groups are working on things like machine learning and AI using quantum computers. Given the time frame for AI development, “I would expect conventional chips optimized specifically for AI algorithms to have more of an impact on the technology than quantum chips,” says Jim Clarke, Intel’s Head of Quantum Computing. Still, AI is undoubtedly fair game for quantum computing.

In principle, we know how to build a quantum computer. We start with simple quantum logic gates, as described in general terms previously, and connect them up into quantum networks [9], as depicted in (Figure 9). A Quantum Logic Gate (QLG), like its cousin the Classical Logic Gate (CLG), is a very simple computing device that performs one elementary quantum operation, usually on two qubits, in a given time. Of course, quantum logic gates differ from their classical counterparts in that they can create and operate on quantum superpositions, as stated before. As the number of quantum gates in a network increases, we quickly run into some serious practical problems. The more interacting qubits are involved, the harder it tends to be to engineer the interaction that would display the quantum properties. The more components there are, the more likely it is that quantum information will spread outside the quantum computer and be lost into the environment, thus spoiling the computation. This process is called decoherence. Therefore, our task is to engineer a sub-microscopic system in which qubits affect each other but not the environment. This usually means isolating the system from its environment, as interactions with the external world cause the system to decohere. However, other sources of decoherence also exist, including the quantum gates themselves and the lattice vibrations and background nuclear spins of the physical system used to implement the qubits. Decoherence is irreversible, as it is effectively non-unitary, and is usually something that should be highly controlled, if not avoided.
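Decoherence can be illustrated with the phase-damping channel. This is a standard textbook model in which the environment gradually destroys the off-diagonal terms (coherences) of a qubit's density matrix while leaving the populations untouched. The model is an assumption chosen for illustration, and the per-step strength `lam` is hypothetical. Because the map is not unitary, the purity Tr(ρ²) falls from 1 toward 0.5. This matches the statement above that decoherence is irreversible.

```python
import math

def matmul(a, b):
    """Product of two 2x2 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)] for i in range(2)]

def dagger(a):
    """Conjugate transpose of a 2x2 matrix."""
    return [[complex(a[j][i]).conjugate() for j in range(2)] for i in range(2)]

def phase_damping(rho, lam):
    """Apply the phase-damping channel rho -> K0 rho K0^+ + K1 rho K1^+ once.

    lam is an assumed per-step probability that the environment 'learns' the phase."""
    k0 = [[1, 0], [0, math.sqrt(1 - lam)]]
    k1 = [[0, 0], [0, math.sqrt(lam)]]
    out = [[0j, 0j], [0j, 0j]]
    for k in (k0, k1):
        term = matmul(matmul(k, rho), dagger(k))
        out = [[out[i][j] + term[i][j] for j in range(2)] for i in range(2)]
    return out

def purity(rho):
    """Tr(rho^2): 1 for a pure state, 0.5 for a maximally mixed qubit."""
    sq = matmul(rho, rho)
    return (sq[0][0] + sq[1][1]).real

# Start in (|0> + |1>)/sqrt(2): a pure state whose coherences rho[0][1] = rho[1][0] = 0.5.
rho = [[0.5 + 0j, 0.5 + 0j], [0.5 + 0j, 0.5 + 0j]]
lam = 0.1   # hypothetical dephasing strength per time step
for step in range(50):
    rho = phase_damping(rho, lam)

print("populations:", round(rho[0][0].real, 6), round(rho[1][1].real, 6))
print("coherence:  ", round(abs(rho[0][1]), 6))
print("purity:     ", round(purity(rho), 6))
```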

Given such constraints, it is not yet clear which technology will support quantum computation in the future. Today, simple quantum logic gates involving two qubits, as we said, are being realized in laboratories as a step forward. Current experiments range from trapped ions, via atoms in an array of potential wells created by a pattern of crossed laser beams, to electrons in semiconductors, as shown in (Figure 10). The technology of the next decade should bring control over several qubits and, without any doubt, we shall already begin to benefit from our new way of harnessing nature.

There are several models of quantum computing, including the quantum circuit model, the quantum Turing machine, the adiabatic quantum computer, the one-way quantum computer, and various quantum cellular automata. The most widely used model is the quantum circuit. Quantum circuits are based on the quantum bit, or “qubit”, which is somewhat analogous to the bit in classical computation. Qubits can be in a 1 or 0 quantum state, or in a superposition of the 1 and 0 states. However, when qubits are measured, the result is always either a 0 or a 1. The probabilities of these two outcomes depend on the quantum state the qubits were in immediately before the measurement. Computation is performed by manipulating qubits with quantum logic gates, which are somewhat analogous to classical logic gates [9].

There are currently two main approaches to physically implementing a quantum computer: analog and digital. Analog methods are further divided into quantum simulation, quantum annealing, and adiabatic quantum computation. Digital quantum computers use quantum logic gates to do computation. Both approaches use quantum bits, or qubits [10]. There are currently several significant obstacles to constructing useful quantum computers. In particular, it is difficult to maintain the quantum states of qubits, as they are prone to quantum decoherence. Quantum computers also require significant error correction because they are far more prone to errors than classical computers [10].
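The measurement rule described above, known as the Born rule, can be simulated classically. A qubit α|0⟩ + β|1⟩ yields 0 with probability |α|² and 1 with probability |β|². In this sketch the amplitudes 0.6 and 0.8i and the random seed are arbitrary choices. Repeated runs produce only 0s and 1s, with the fraction of 1s close to |β|² = 0.64.

```python
import random

def measure(alpha, beta, rng):
    """Sample one computational-basis measurement of alpha|0> + beta|1> (Born rule)."""
    p0 = abs(alpha) ** 2 / (abs(alpha) ** 2 + abs(beta) ** 2)
    return 0 if rng.random() < p0 else 1

rng = random.Random(2024)   # fixed seed so the run is reproducible
alpha, beta = 0.6, 0.8j     # |alpha|^2 = 0.36, |beta|^2 = 0.64
shots = 10_000
outcomes = [measure(alpha, beta, rng) for _ in range(shots)]
print("distinct outcomes:", sorted(set(outcomes)))
print(f"fraction of 1s: {sum(outcomes) / shots:.3f}")
```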

The question “When will we see a working quantum computer solving real-world problems?” remains to be answered. The first transistor was introduced in 1947. The first integrated circuit followed in 1958. Intel’s first microprocessor, which had only about 2,500 transistors, didn’t arrive until 1971. Each of those milestones was more than a decade apart. People think quantum computers are just around the corner, but history shows these advances take time. Suppose that 10 years from now we have a quantum computer with a few thousand qubits. That would undoubtedly change the world in the same way the first microprocessor did. We and others have been saying it’s ten years away. Some are saying it’s just three years away, and I would argue that they don’t understand how complex the technology is.

Finally, it is worth noting that any computational problem that can be solved by a classical computer can also, in principle, be solved by a quantum computer. Conversely, quantum computers obey the Church–Turing thesis; that is, any computational problem that can be solved by a quantum computer can also be solved by a classical computer. This means that quantum computers provide no additional power over classical computers in terms of computability. In theory, however, they do provide extra power when it comes to the time complexity of solving certain problems. Notably, quantum computers are believed to be able to quickly solve certain problems that no classical computer could solve in any feasible amount of time, a feat known as “quantum supremacy” [10]. The study of the computational complexity of problems concerning quantum computers is known as quantum complexity theory.

For more Lupine Publishers Open Access Journals, please visit our website.

For more Modern Approaches on Material Science articles, please click here:

https://lupinepublishers.com/material-science-journal/

For High Strength Low Alloy (HSLA) steels and for age-hardenable (maraging) steels, strengthening by precipitation is done before the forming operation to increase the yield stress as much as possible. In this publication, the advantages of a hardening heat treatment after the forming operation are investigated in a low-cost age-hardenable Fe-Si-Ti steel suitable for automotive applications.

Keywords: Age-hardenable, Precipitation, Forming, Ductility

High strength low alloy (HSLA) steels are a group of low-carbon steels with small amounts of alloying elements (such as Ti, V, and Nb). These elements provide a good combination of strength, toughness, and weldability [1-3]. Through the addition of micro-alloying elements (less than 0.5 wt.%), HSLA steels are strengthened by grain refinement, solid solution strengthening, and precipitation hardening [4-8]. For automotive applications, the entire range of HSLA steels is suitable for structural components of cars, trucks, and trailers, such as suspension systems, chassis, and reinforcement parts. HSLA steels offer good fatigue and impact strength. Given these characteristics, this class of steels enables weight reduction in reinforcement and structural components [9-11]. Despite the interest in HSLA steels, their precipitation hardening is only about 100 MPa. New targets for vehicle CO2 emissions therefore push steelmakers to develop Advanced High Strength Steels (Dual-Phase, Transformation Induced Plasticity). These are hardened by multiphase microstructures containing 10 to 100% martensite [12] and offer a better combination of strength and ductility at an acceptable cost. Note, however, that in these steels the Ultimate Tensile Strength (UTS) increases more than the Yield Stress (YS), while YS is crucial for anti-intrusion performance during a crash [12]. On the other hand, because there is no phase transformation in aluminium, age-hardening by precipitation is widely used in aluminium alloys hardened by various alloying elements (see [13] for a review). The volume fraction of precipitates is several percent and the hardening can reach up to 400 MPa, but the ductility decreases rapidly.

Among steels, only maraging steels are strengthened by massive precipitation in a martensitic matrix (see [14] for a review). Despite their impressive YS (up to 2.5 GPa), their uniform elongation is less than 1%; consequently, no forming operation is possible. In addition, their high contents of nickel (about 18%), molybdenum, and cobalt make these steels very expensive, so they are never used in the automotive industry. In contrast, in the 1960s researchers investigated other kinds of steels that could be strongly hardened by massive intermetallic precipitation without extra cost [15,16]. Among the different systems investigated, Fe-Si-Ti alloys are the most promising. This is why a series of publications was dedicated to this system in the 1970s by Jack et al. [17-19]. Unfortunately, only hardness and compression behaviour were reported, and much of the discussion concerned the nature of the precipitates (Fe2Ti, FeSi, or Fe2SiTi). More recently, a systematic study of precipitation kinetics in an Fe-2.5%Si-1%Ti alloy in the temperature range 723 K to 853 K (450°C to 580°C) has been carried out [20]. It combined complementary tools: transmission electron microscopy (TEM), atom probe tomography (APT), and small-angle neutron scattering (SANS). The study showed that the Heusler phase Fe2SiTi dominates the precipitation process in the investigated time and temperature range, regardless of the details of the initial temperature history.

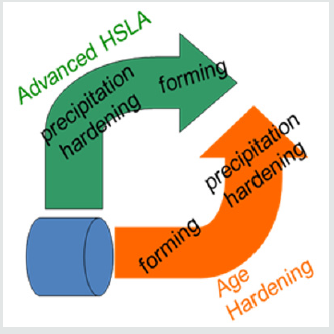

Figure 1: Summary of the different strategies for the use of precipitation hardened steels: The green arrow is the usual one, the orange is the strategy developed in this publication.

Ductility decreases steadily as hardening increases (up to 1.2 GPa), and the target is a steel suitable for deep drawing. For these reasons, the strategy shown in (Figure 1) (orange arrow) has been investigated. The objective is to form the material while it is as soft and as ductile as possible and to obtain the hardening by a heat treatment after forming. This publication characterizes this route. Note that because Si and Ti promote ferrite, there is no phase transformation whatever the heat treatment.

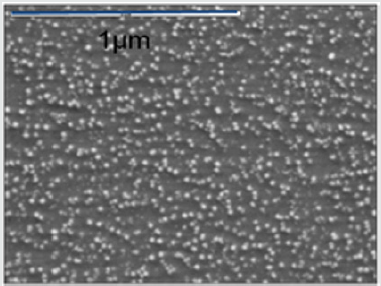

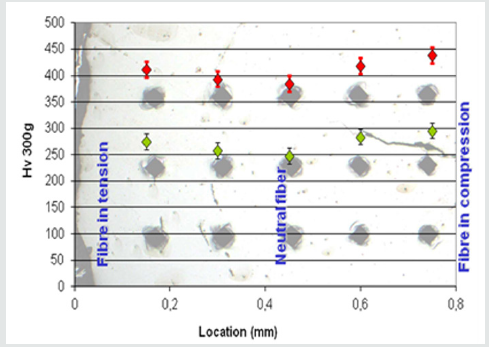

This route was assessed for the first time in a steel for automotive applications. Hardening was obtained by a heat treatment at 500°C for two hours, consistent with the kinetics determined by SANS [20]. (Figure 2) illustrates the tensile behavior. The steel in the solid-solution state (i.e., just after recrystallization) is very ductile, like IF steels, but has a higher yield stress due to solid-solution hardening. After treatment, a hardening of 300 MPa is obtained with promising ductility. As shown in (Figure 3), the treatment induced the expected massive precipitation of Fe2SiTi (3.8 wt.%, with a radius of 4 nm, as determined by TEM and SANS [20]). To assess the metallurgical route, (Figure 4) shows that the steel in the solid-solution state can be severely bent, up to an angle of 180°, without any defect. Hardness through the thickness was measured before and after the heat treatment (Figure 5), and the values confirm the hardening by precipitation. Note that this precipitation hardening is not sensitive to the strain hardening induced by bending through the thickness. Because the alloy is intended for the automotive industry, its drawability must be investigated. A cup of 5 cm diameter was therefore manufactured without any problem by deep drawing the steel in the solid-solution state, as illustrated in (Figure 6). This confirms the very high ductility before heat treatment. Another problem in the automotive industry is that spring-back increases with strength, and this phenomenon is very difficult to predict or model. As shown in (Figure 7), this aspect has been studied with a standard test based on forming a hat-shaped part. The spring-back during the treatment is low, probably because there is no phase transformation during precipitation and therefore no internal stresses.

Figure 3: TEM micrograph showing the massive precipitation of Fe2SiTi after 2 hours at 500°C (the composition was determined by APT [20]).

Figure 5: Hardness measurement through the thickness of the fully bent specimen (i.e., angle of 180°) before and after heat treatment.

For the first time in the steel industry for automotive applications, a low-cost age-hardenable steel has been studied following a strategy in which forming operations precede the heat treatment. Bending, drawability, and spring-back have been investigated, with promising results. In addition, the alloy's cost is acceptable for the automotive industry. In future work, crashworthiness and weldability should be assessed after heat treatment. A final advantage is that many parts can be treated at the same time in a furnace normally dedicated to tempering treatments.
